Projects

Date: April 2024 – Ongoing

Student Team: Barbara Martinez Neda

Faculty Collaborators: Sergio Gago-Masague, Jennifer Wong-Ma

Description: This research project seeks to improve undergraduate retention rates in Computer Science for students from underrepresented communities through a student-centric, data-driven approach. The project will use predictive modeling to identify at-risk students, then offer them evidence-based interventions such as math tutoring, wellness workshops, and mentorship. It has three aims: assessing the impact of the interventions, refining them through mixed-method analyses, and evaluating their sustainability and broader impact. Outcomes will be shared through open-source resources and presentations, with the goal of enhancing retention and diversity in STEM at Hispanic-Serving Institutions.

Investigating the Role of Socioeconomic Factors on CS1 Performance

Date: Fall 2022 – Ongoing

Student Team: Barbara Martinez Neda, Jason Lee Weber, Kitana Carbajal Juarez, Flor Morales

Faculty Collaborators: Sergio Gago-Masague, Jennifer Wong-Ma

Description: This research focuses on improving the academic success of Computer Science students at the University of California, Irvine, with an emphasis on reducing high academic probation rates, particularly among underrepresented groups (URG). By surveying CS1 students, the study explores how socioeconomic factors like mental health and academic preparedness affect outcomes. The findings will inform targeted support and improve predictive models, enhancing academic success through more inclusive and tailored interventions.

Publications

- Investigating the Role of Socioeconomic Factors on CS1 Performance. B Martinez Neda, F Morales, K Carbajal Juarez, J Wong-Ma, S Gago-Masague. 2024 IEEE Global Engineering Education (EDUCON)

- Testing Machine Learning Models to Identify Computer Science Students at High-risk of Probation. H Errahmouni Barkam, M Wang, B Martinez Neda, S Gago-Masague. Proceedings of the 53rd ACM Technical Symposium on Computer Science Education, 1161

- Using machine learning in admissions: Reducing human and algorithmic bias in the selection process. B Martinez Neda, Y Zeng, S Gago-Masague. Proceedings of the 52nd ACM Technical Symposium on Computer Science Education, 1323

- (Short Paper/Posters) Impacts of Academic Preparedness on CS1 Performance. B Martinez Neda, F Morales, K Carbajal Juarez, J Wong-Ma, S Gago-Masague. Proceedings of the 55th ACM Technical Symposium on Computer Science Education

- Admission Prediction in Undergraduate Applications: an Interpretable Deep Learning Approach. A Priyadarshini, B Martinez Neda, S Gago-Masague. 2023 International Conference on Transdisciplinary AI (TransAI), 135-140

- Staying Ahead of the Curve: Early Prediction of Academic Probation among First-Year CS Students. B Martinez Neda, M Wang, A Singh, S Gago-Masague, J Wong-Ma. 2023 International Conference on Applied Artificial Intelligence (ICAPAI), 1-7

- Revisiting Academic Probation in CS: At-Risk Indicators and Impact on Student Success. B Martinez Neda, M Wang, HE Barkam, J Wong-Ma, S Gago-Masague. 2023 Journal of Computing Sciences in Colleges, 8-16

- Machine Learning with Keyword Analysis for Supporting Holistic Undergraduate Admissions in Computer Science. B Martinez Neda. 2022 University of California, Irvine ProQuest Dissertations & Theses

- Feasibility of Machine Learning Support for Holistic Review of Undergraduate Applications. B Martinez Neda, S Gago-Masague. 2022 International Conference on Applied Artificial Intelligence (ICAPAI), 1-6

Extracting Information from Undergraduate Admissions Essays to Predict Academic Performance in CS

Date: March 2024 – Ongoing

Student Team: Barbara Martinez Neda, Joshua Kawaguchi, Kai Yu, Jason Lee Weber

Faculty Collaborators: Sergio Gago-Masague, Jennifer Wong-Ma

Description: This research focuses on extracting topics from students’ admissions essays using Latent Dirichlet Allocation (LDA) and BERTopic. Our goal is to uncover patterns in student writing that may offer insight into academic challenges students could face once enrolled. Identifying these challenges early in students’ careers could allow for tailored support that improves their academic success.

Developing a Lightweight LLM Tool to Assist Course Staff in Answering Students’ Administrative Questions

Date: January 2024 – Ongoing

Student Team: Jason Lee Weber, Barbara Martinez Neda, Ulises Maldonado, Alessio Diaz Cama, Justin Tian Jin Chen, Agustín Angulo

Faculty Collaborators: Sergio Gago-Masague, Jennifer Wong-Ma

Description: This project develops a virtual assistant to automate responses to student questions about course logistics. The assistant combines a lightweight Large Language Model (LLM) with a Retrieval-Augmented Generation (RAG) system that references the course syllabus and calendar, so it can provide accurate, timely answers and reduce the workload on course staff. Designed to run efficiently on consumer hardware, it offers a cost-effective solution without the need for expensive API access to state-of-the-art models like GPT-4.
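The retrieval step of a RAG pipeline like the one described can be illustrated with a minimal sketch. This is not the project's implementation: it assumes a simple bag-of-words cosine similarity in place of whatever retriever the tool actually uses, it stops at building the prompt rather than calling a model, and all names (`tokenize`, `retrieve`, `build_prompt`, the sample chunks) are hypothetical.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> Counter:
    """Lowercase the text and count its alphanumeric tokens."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two token-count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(
        sum(v * v for v in b.values())
    )
    return dot / norm if norm else 0.0


def retrieve(question: str, chunks: list[str], k: int = 1) -> list[str]:
    """Return the k chunks most similar to the question."""
    q = tokenize(question)
    ranked = sorted(chunks, key=lambda c: cosine(q, tokenize(c)), reverse=True)
    return ranked[:k]


def build_prompt(question: str, chunks: list[str], k: int = 1) -> str:
    """Assemble a prompt that grounds the model in the retrieved course text."""
    context = "\n".join(retrieve(question, chunks, k))
    return (
        "Answer using only this course information:\n"
        f"{context}\n\nQuestion: {question}"
    )


# Hypothetical syllabus and calendar excerpts, invented for illustration.
chunks = [
    "Homework 2 is due Friday at 11:59 pm.",
    "Office hours are held Tuesdays 2-4 pm in room 101.",
    "The midterm exam covers weeks 1 through 5.",
]
prompt = build_prompt("When is homework 2 due?", chunks)
```

The resulting prompt would then be passed to a locally hosted model. Restricting the context to retrieved syllabus text is what lets a small model answer logistics questions without relying on its own knowledge.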

Investigating Student Perceptions and Usage of Large Language Models in CS Education

Date: January 2023 – Ongoing

Student Team: Jason Lee Weber, Barbara Martinez Neda, Kitana Carbajal Juarez

Faculty Collaborators: Sergio Gago-Masague, Jennifer Wong-Ma, Hadar Ziv

Description: This project investigates the adoption and perception of Large Language Models (LLMs) in college-level CS education. Through a large-scale survey of 760 participants (intro-sequence students, experienced students, and faculty), we explore differing views on the educational benefits of LLMs, as well as their reliability and impact on academic integrity. While experienced students quickly embrace LLMs, faculty and newer students remain cautious. The findings highlight the need for careful, ethical integration of LLMs in education to balance their potential with concerns around their use.

Publications

- Beyond the Hype: Perceptions and Realities of Using Large Language Models in Computer Science Education at an R1 University. JL Weber, B Martinez Neda, K Carbajal Juarez, J Wong-Ma, S Gago-Masague, H Ziv. 2024 IEEE Global Engineering Education (EDUCON)

- Measuring CS Student Attitudes Toward Large Language Models. JL Weber, B Martinez Neda, K Carbajal Juarez, J Wong-Ma, S Gago-Masague, H Ziv. Proceedings of the 55th ACM Technical Symposium on Computer Science Education

Student-Project Matching Tool (SPMT) for Capstone Projects

(ICS Research Award 2023)

Date: January 2023 – Ongoing

Student Team: Jason Lee Weber, Barbara Martinez Neda

Faculty Collaborators: Sergio Gago-Masague, Jennifer Wong-Ma

Description: The Student-Project Matching Tool (SPMT) is an algorithmic tool developed for a software engineering capstone course to optimize student-project pairings. It aligns students with industry-sponsored projects based on their interests and skills, addressing the challenge of forming effective teams. Students select desired learning outcomes from a predefined list of software engineering categories, and the SPMT calculates optimal matches between student interests and project requirements. Preliminary results show increased student satisfaction, proficiency growth, and positive sponsor feedback.
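As a rough illustration of the matching idea described above, the sketch below scores each student-project pair by the overlap between the student's chosen learning outcomes and the project's categories, then searches for the assignment with the highest total score. It is a simplified sketch under stated assumptions, not the SPMT algorithm itself: the Jaccard score, the one-student-per-project restriction, the brute-force search, and all names and sample data are hypothetical.

```python
from itertools import permutations


def match_score(student_goals: set[str], project_needs: set[str]) -> float:
    """Jaccard overlap between a student's goals and a project's categories."""
    union = student_goals | project_needs
    return len(student_goals & project_needs) / len(union) if union else 0.0


def best_assignment(
    students: dict[str, set[str]], projects: dict[str, set[str]]
) -> dict[str, str]:
    """Return the one-to-one assignment with the highest total score.

    Assumes at least as many projects as students. Brute force over all
    orderings, so only practical for a handful of students.
    """
    names = list(students)
    best: dict[str, str] = {}
    best_total = -1.0
    for combo in permutations(projects, len(names)):
        total = sum(
            match_score(students[s], projects[p]) for s, p in zip(names, combo)
        )
        if total > best_total:
            best, best_total = dict(zip(names, combo)), total
    return best


# Hypothetical students and projects, invented for illustration.
students = {
    "ana": {"frontend", "testing"},
    "ben": {"databases", "backend"},
}
projects = {
    "portal": {"frontend", "ux", "testing"},
    "warehouse": {"databases", "backend", "devops"},
}
assignment = best_assignment(students, projects)
```

A real capstone course places several students on each project and has far more participants, so a practical tool would need team capacities and a scalable assignment solver rather than exhaustive search.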

Publications

- WIP: Maximizing Individual Learning Goals Through Customized Student-Project Matching (SPM) in CS Capstone Projects. B Martinez Neda, JL Weber, S Gago-Masague, J Wong-Ma. Accepted at FIE 2024.

- Enhancing Learning in CS Capstone courses through Advanced Project Matching. B Martinez Neda, JL Weber, S Gago-Masague, J Wong-Ma. 2024 Journal of Computing Sciences in Colleges.

Student Evaluations – Summary and Sentiment

Date: January 2023 – Ongoing

Student Team: Aditya Phatak, Suvir Bajaj, Rutvik Gandhasri

Faculty Collaborators: Jennifer Wong-Ma, Pavan Kadandale, Hadar Ziv

Description: Student feedback plays a crucial role in course pedagogy and in creating inclusive learning environments. Student evaluations of teaching and mid-term feedback surveys remain the de facto standard for collecting numerical ratings and written comments. In courses with large enrollments, particularly introductory courses, the sheer volume of responses often leaves instructors in the dark about true student perceptions. Additionally, instructors may form a skewed impression of the class as a whole based on the “loud” feedback in the written comments or the opinions of a small subset of students.

The goal of this project is to develop tools that help faculty identify the overall themes and sentiment in student feedback so that they can improve their teaching.

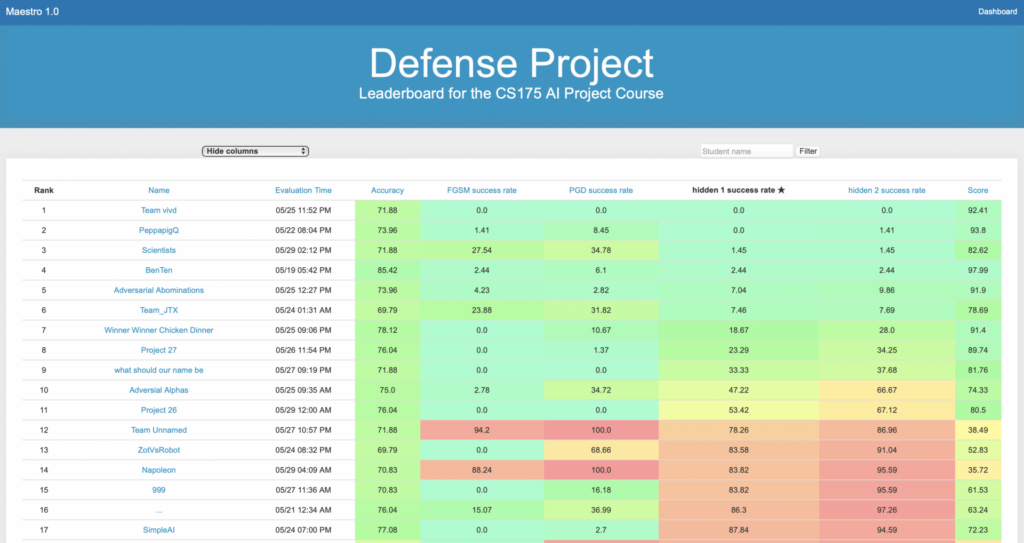

MAESTRO

(NSF # 2039634)

Date: August 2020 – Ongoing

Student Team: Margarita Geleta, Jiacen Xu, Manikanta Loya, Junlin Wang

Faculty Collaborators: Sameer Singh, Zhou Li

Description: MAESTRO is an educational tool for courses in robust AI aimed at undergraduate and graduate students. It provides a friendly competitive programming environment for hands-on experience in adversarial AI. Through game-based assignments, students take part in competitive programming to build adversarial attacks and defenses. The MAESTRO leaderboard serves as a gamification tool: students can compare their submissions against the baselines and the results of other students, which encourages interaction and eagerness to learn. MAESTRO is open-source and available to researchers and educators as a resource for implementing and deploying successful teaching methods in AI-cybersecurity and robust AI.